I've encountered a very similar situation in so many companies: their IT landscape kept growing (while nothing was decommissioned), and the layers they initially had became more complicated or even split into sub-layers: sometimes parallel, sometimes stacked. Obviously, different components frequently use different technologies, so the skill set required to develop & maintain all that got significantly broader.

Consequences are numerous:

- architecture is complex & hard to govern (i.e. to keep consistent, maintainable and manageable)

- developing a single, meaningful, end-to-end scenario requires a spike through many layers - various skills & some coordination are needed

IT managers are usually far more afraid of the 1st point. Issues there can screw up whole systems permanently, while the 2nd one usually causes "only" major inefficiencies :) That's why a typical IT department in a large enterprise is organized in units that each deal with a particular tier / layer / technology - obviously this completely contradicts the idea of cross-disciplinary, independent (as much as possible) teams promoted by Agile evangelists (& myself). And when I suggest mixing people with different specializations around one particular product area, the usual answer is:

> We can't do that. It's better to keep AAA people together (same team, co-located), BBB people together, CCC people together - this way:
>
> - tech knowledge transfer (in their respective area) will be easier
>
> - they all will be doing their tech stuff the same way (same architecture)
>
> If we split them and subordinate them to functional (product-related) leadership - who will be responsible for the architecture in their tech area?

It's a valid concern; however, the situation is not as hopeless as it seems (and the game - cross-disciplinary teams - is certainly worth fighting for).

Shared responsibility ain't no responsibility?

Yes, responsibility can be shared. Not in EVERY community, though: some degree of maturity, initiative, engagement, organizational skills & technical competence is required. How do you know whether your community is ready for that? If you have to ask / probe, it means it isn't. Why? Because this readiness can be seen with your own eyes - visible bottom-up initiatives, spontaneous internal marketing, a proactive search for improvements, the rise of natural (skill-based, respect-based) field-level leaders are the indications that your community is ready for this challenge. What if it isn't? Forget I've said anything ...

But won't we break these ideas & initiatives by distributing people from one tech area across different teams? No, not if management leaves them some space for perpendicular association & collaboration - perfect examples are Guilds & Tribes at Spotify (you can learn about them here).

Obviously, if the community (or rather - the organization's culture) is not ready for such a change, it may end in disaster: sheeple (people who just do what they are told, like sheep) will mindlessly do their work with minimum effort, without caring about something they don't feel accountable for. In such a case, architecture deteriorates extremely quickly - Martin Fowler has a great article about the lack of well-thought-out architecture, so I won't elaborate on that.

Are guilds enough?

Sadly, even though guilds are a great idea, they are not sufficient (even if people really contribute, not just meet for social reasons). Why? Mainly because people are just people: they meet, talk, even reach some kind of agreement (they may not have fully understood each other, though...), but in the end they tend to part ways without setting action items. Or there are items, but it's hard to find people to actually execute them, especially when the items are more about governance (inspections, validations, reviews) than about creating new, exciting stuff.

Fortunately, not all hope is lost. Governance is (by design) a continuous, tedious, repeatable process, so ... why the hell don't we automate it? And that's exactly what Convention Tests and Static Code Analysis tools are very helpful with!

Rise of the robots

What are Convention Tests? There's a classic series of articles (here, here & here) about them by Krzysztof Koźmic (recalled recently by Maciej Aniserowicz during his talk - thanks for that!). In short - if you agree (collegially, autocratically, whatever) to introduce some kind of convention into your code (about naming, dependencies, structure, complexity, use of particular language constructs, etc.), why not use an automated tool to guard / verify it (using reflection, AST analysis, dedicated metadata, etc.)?
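To make that a bit more tangible, here's a minimal sketch of such a test in Python, using AST analysis from the standard `ast` module. Everything specific in it is made up for illustration: the convention itself (the `domain` layer must not import anything from the `ui` layer), the package names and the `forbidden_imports` helper.

```python
import ast

# Hypothetical convention: code in the "domain" layer must not import from "ui".
FORBIDDEN_PREFIXES = ("ui",)


def forbidden_imports(source, forbidden=FORBIDDEN_PREFIXES):
    """Return the imported module names in `source` that break the convention."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            modules = [node.module]  # absolute "from x import y" only
        else:
            continue
        for module in modules:
            # Match "ui" itself and anything below it, e.g. "ui.widgets".
            if any(module == p or module.startswith(p + ".") for p in forbidden):
                violations.append(module)
    return violations


# Two tiny in-memory "files" standing in for real modules of the domain layer.
GOOD = "import decimal\nfrom domain.orders import Order\n"
BAD = "from ui.widgets import Button\nimport ui\n"

print(forbidden_imports(GOOD))  # []
print(forbidden_imports(BAD))   # ['ui.widgets', 'ui']
```

In a real code base you'd feed it the contents of every file of the guarded package and assert that the result is empty. The source is only parsed, never executed, so the check stays fast and free of side effects.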

You could either use dedicated tools that offer a wide choice of pre-set rules (that may be tricky, as the rules may not really fit you) or the capability of creating new ones. This is most likely the easiest (but less flexible) way. A few examples:

- SonarQube (generic)

- ESLint (JavaScript)

- FxCop (.NET, regardless of language, because it analyzes compiled IL, not source files)

Or you could craft something on your own:

- using Roslyn (yay, another reason to use Roslyn ;))

- using parser generators like ANTLR - I did that twice, for two different languages; it's less tricky than you think, but some compiler-related knowledge is required

- or just by analyzing code with your platform's reflection or tools like NDepend (which even exposes a dedicated DSL named CQL - Code Query Language)
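The reflection route in particular needs surprisingly little code. A minimal sketch in Python (standing in for whatever your platform offers), assuming a made-up convention that every subclass of a hypothetical `Repository` base class has a name ending in `Repository`; all class and function names below are invented for the example:

```python
class Repository:
    """Hypothetical base class the convention is about."""


class OrderRepository(Repository):
    pass


class CachedOrderRepository(OrderRepository):
    pass


class CustomerStore(Repository):  # breaks the convention on purpose
    pass


def all_subclasses(cls):
    """Yield every direct and indirect subclass of `cls`."""
    for sub in cls.__subclasses__():
        yield sub
        yield from all_subclasses(sub)


def misnamed_subclasses(base, suffix):
    """Sorted names of subclasses of `base` that lack the agreed suffix."""
    return sorted({c.__name__ for c in all_subclasses(base)
                   if not c.__name__.endswith(suffix)})


print(misnamed_subclasses(Repository, "Repository"))  # ['CustomerStore']
```

Wrapped in a unit test that asserts the list is empty, this fails the build the moment somebody adds a `CustomerStore`. Note that it only sees classes that have already been imported, so a real test would have to load the application packages first.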

To make it work, you have to remember a few basic rules, though:

- Convention is dead (sooner or later) without the tests - the sooner you make them, the better

- If it's a new convention & it's broken by design in the beginning, make sure you've got the means to track its "brokenness" level, so you're able to observe the trend (e.g. percentage, number of occurrences, etc.)

- Even if you have tests, they are pretty much dead & useless if:

  - they are not executed by devs before the commit

  - they are not executed in the Continuous Integration loop (which pretty much enforces the former)

  - their output is ignored, they are not stable, or they suffer from any other flaw typical of poorly written normal automated tests
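The rule about tracking the "brokenness" level can be sketched as a simple ratchet: compare the current number of violations with a stored baseline, fail only when it gets worse, and tighten the baseline when it gets better. This is just one possible approach; the `check_ratchet` helper, the JSON layout and the file name are all assumptions made for this example.

```python
import json
import tempfile
from pathlib import Path


def check_ratchet(rule, current, baseline_file):
    """Return True if `current` violations of `rule` are no worse than the baseline.

    The stored baseline is lowered automatically whenever the count improves.
    """
    baseline = json.loads(baseline_file.read_text()) if baseline_file.exists() else {}
    allowed = baseline.get(rule, current)  # first run: accept the current state
    if current > allowed:
        return False  # regression: leave the baseline untouched
    baseline[rule] = current
    baseline_file.write_text(json.dumps(baseline, indent=2))
    return True


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "convention_baseline.json"
    print(check_ratchet("no-ui-imports", 12, path))  # True: first run records 12
    print(check_ratchet("no-ui-imports", 9, path))   # True: baseline drops to 9
    print(check_ratchet("no-ui-imports", 10, path))  # False: worse than 9
```

Keeping the baseline file in version control gives you the trend for free: its history shows how quickly (or slowly) the number of violations goes down.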

To summarize:

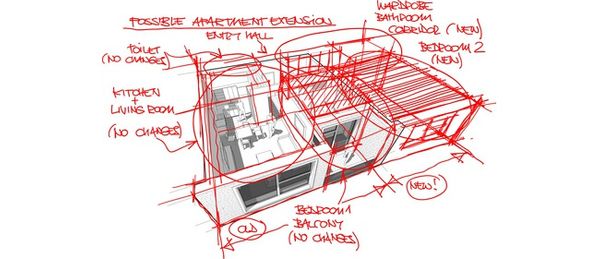

If we're in the Software Architecture as Code phase already, why stand against Software Architecture Health-check as Code? :)