This post is all about how we've decided to revamp our FINAL approach to E2E automated testing in a way that was supposed to maximize the chance of final success. Warning, post contains: AK-47, what's wrong with "tightening the screw", why JavaScript is better than Java (;>).

Previous post in the series can be found here.

Where were we? At the end of part IV, our E2E automation was going nowhere. Or, to be more precise, it was far too slow to get us where we were aiming (Continuous-bloody-Delivery). That was the perfect moment for an ...

Earthquake

What kind of earthquake (I've called it "intervention" in the last post)? Did I plan to fire someone to shake up others? Nope, that's not really my way of doing things (unless I find it absolutely necessary, because there are actual "bad apples").

There were several ideas considered. The most tempting were:

- hire a "driver" - an external QA Architect (not involved in the previous failure) to own the topic (which would become a "mission")

- tighten the screw (by stricter governance) - e.g. set up weekly targets & enforce their execution; this would help with the mobilization, but it's not how we do things - I prefer owners who want to achieve something, not task-pushers in a neo-feudal work setup

- parallel benchmark - execute an independent experiment to prove that things CAN & SHOULD go faster, smoother & more effectively

You've probably guessed already - yes, we went for the 3rd option. With the following assumptions:

- make it as comparable as possible (in terms of initial conditions & goals to be achieved) to the "old" approach

- missionary ownership - only highly motivated individuals who don't look for excuses, but blow out all the glaciers on their way

- the "new way" has to be obviously better when it comes to all identified major pain-points of the old solution, namely: more flexible (more people can write tests, not only QA), closer to the Front-End code (so e.g. one commit can contain test & refactoring which makes code more testable), lower entry threshold (we don't want to spend 2 months on initial setup ...)

I had no issue with finding owners, there was a bunch of people who were 100% up for a challenge. But it took us a few days to refine the approach as we had to deal with context-specific constraints:

- we have both web & mobile client applications (even if the latter are JS-based, they are bundled as store apps)

- our most popular platform is ... iOS/Safari - believe it or not, it outnumbers Android several times over in our case; it's an issue because Safari has its quirks and very few non-Selenium testing solutions have it properly covered

Anyway, we've done our homework and a new project was born.

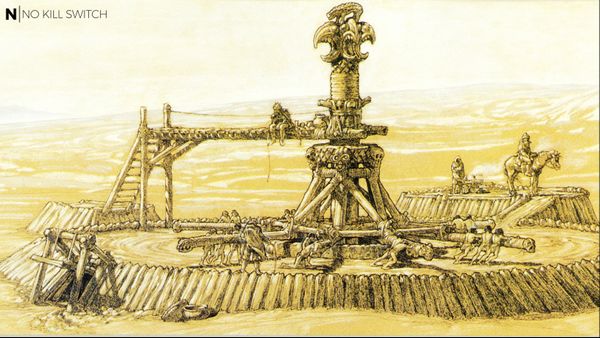

Codename: Kalashnikov

Why such a name? Because AK-47 was exactly what we needed :) An automatic "tool" that:

- ain't really very fancy

- but it does its freaking job while at the same time being cheap, easy to obtain, resilient and trivial to learn to operate

Kalashnikov's automatic rifle was a near-perfect depiction of our philosophy at this point - we just needed a bunch of rebels with AK-47s who wouldn't spend 3 months on tweaking settings, but would carve their way forward with rapidly dispatched lead. Getting things shot ... done.

What were the key characteristics of Operation Kalashnikov?

- it was time-boxed (2 weeks only)

- it was done by people who hadn't done test automation before, but volunteered for the mission - it was up to them to define HOW to reach the goal: full autonomy, with a clear goal set up-front; one more thing - their background was not QA but Front-End development: JavaScript web applications are their specialty

- we've abandoned the Java stack, moving to JS & Cypress as a test framework - it works out-of-the-box & is much faster (when it comes to both writing & running tests) than Selenium wrappers. For very specific cases that either behave differently on Safari or involve something mobile-specific, we've decided to use a JS Selenium wrapper as an exception (Pareto rule ftw).

- Kalashnikov was supposed to happen in parallel to existing test automation efforts, on a separate application that had no automated E2E tests yet - just to make the experiment fairer (in terms of comparability)

Big Deal

In fact, the whole thing was a very big gamble.

Two weeks do not look like a major investment to cry over (we had only 3 people officially involved in the topic - a few more volunteered help in spare time they carved out, though), BUT I was seriously fed up with TRYING. For me it was the last GO. Solutions that were waiting next in line were dramatically different and wouldn't make me (or anyone else within my teams) happy, but I was running out of feasible options we could have handled internally ...

So, how did it go?

You can find out by reading the next post in the series ...