This blog post is all about: the difference between focusing on doing and focusing on getting things done, the importance of owning your own metrics (KPIs), why data can be a double-edged sword (but transparency is still the key), why an engineer who doesn't introspect on her/his work can be effective only by pure luck, and why falling into "victimship" (instead of trying to fix the issue) has never ever fixed any problem.

Part III of the series can be found here.

Disclaimer: to make this as brief as possible, I've filtered out several side topics - e.g. test pyramid considerations, all the reasoning behind putting UI tests before API tests, etc. The story I'm depicting here is FAR from the complete picture, but well ... it's going to be a blog post series, not "The Lord of the Tests". :)

I've just checked - that's crazy, but I haven't posted about "Our Continuous Testing odyssey" since October! And even then, my posts were not really 100% up-to-date with the situation at the time :) I think it's high time to catch up a bit ...

TL;DR on the status where the story stopped:

- we've revisited (& validated) scope of regression testing, ...

- ... introduced a clear duty split (rotational to avoid anyone getting jaded) with a visual roster to make it public

- ... prioritized the automation plan based on the amount of manual work to be reduced

- ... minimized the amount of "operational" QA work, EVEN though we knew it would increase the Test Debt (e.g. we created only a minimal number of new test cases for new functionalities, we didn't design new data for DB seeding, Front-End & Back-End Developers did a lot of cross-testing instead of QA, who were doing automation, etc.)

I've also pushed to collect (a reasonable amount of) data from the beginning - but (as the future showed me ...) I was the only one who actually understood the impact of that ...

Btw. - this detail was not that relevant at that point, but will be important later - QA Engineers had picked their own tech stack for automation: the one they felt the most comfortable with (Java + TestNG + Selenium), BUT that meant they were the ONLY Java engineers in the organization. I didn't object - they had been working on it for a few months before I even joined the organization & I didn't have any clear proof that it would be a significant issue (all newly recruited QA were tested for their Java-fu).

Data is a double-edged sword

We've announced the big moment ("we're starting! for real!"), applied the roster to everyday work & started measuring the progress (just that - number of automated test cases day-by-day). Sounded like a reasonable beginning.

Frankly? I was certain of success: stable foundation, secured workforce, situation under control - with a Kaizen attitude (continuous, short-feedback-loop-based learning), success seemed inevitable.

One.

Two.

Three.

Four weeks passed. Without a significant issue.

I kept checking the trend & made sure it was updated daily.

For some organizations a month is nothing; their change-absorption period is at least half a year. For us (a rapidly scaling upstart) a month is like an era. For me it was high time to draw some conclusions. Some of them were very predictable:

- in case of any emergency (someone sick, Sprint increment in jeopardy), QA automation (as "aspirational" kind of work) was the first to suffer

- so the average headcount of people actually doing the automation was roughly 2 instead of 4 (this was NOT tracked in detail; less than expected, but still enough to get something tangible done)

Some other conclusions were more surprising: the learning curve was not visible in the trend! The velocity of creating new test cases was:

- constant

- embarrassingly low

And we were not gaining speed :(
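For readers who want to run the same check on their own numbers, here is a minimal sketch of how a "no learning curve" conclusion can be read from a day-by-day count of automated test cases. It is not the tooling we used; the function names, the 5-day working week and the sample data are all assumptions made up for illustration. The idea: turn the cumulative count into per-week velocity, then look at the slope of that velocity. A slope near zero means the team is not getting faster.

```python
from statistics import mean


def weekly_velocity(cumulative_counts, days_per_week=5):
    """New test cases added in each full week of a daily cumulative series.

    Assumes the count was 0 before the first recorded day.
    """
    weekly_totals = cumulative_counts[days_per_week - 1::days_per_week]
    previous = [0] + weekly_totals[:-1]
    return [now - before for now, before in zip(weekly_totals, previous)]


def velocity_slope(velocities):
    """Least-squares slope of velocity against week index (needs >= 2 weeks)."""
    xs = range(len(velocities))
    x_mean, y_mean = mean(xs), mean(velocities)
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, velocities))
    denominator = sum((x - x_mean) ** 2 for x in xs)
    return numerator / denominator


# Hypothetical data: 20 working days, cumulative number of automated tests.
daily_cumulative = [1, 1, 2, 2, 3, 4, 4, 5, 5, 6, 7, 7, 8, 8, 9, 10, 10, 11, 11, 12]

velocities = weekly_velocity(daily_cumulative)
print(velocities)                  # tests added per week
print(velocity_slope(velocities))  # ~0 means constant speed, no learning curve
```

A healthy learning curve would show up as a clearly positive slope in the first weeks, even if it flattens out later.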

I shared my observations, indicated my concerns, asked for some more data and re-emphasized the goal, but I didn't raise the alarm and decided to watch carefully for 1 more month.

Nothing improved. Quite the contrary. But there was worse news.

Misalignment

What is more, the QA team didn't actually understand the concept of "owning this metric" (QA automation progress + time saved on automation) - they didn't realise that this metric matters! They were fully convinced that spending all the time left after manual testing on writing automated tests was good enough - they felt satisfied that they could write some code (instead of having to stick to manual testing all the time).

But they missed a few CRITICAL points:

- they were automating because we had decided to start accumulating some Process & Test Debt - NOT because automation saved us time! This could NOT last forever

- they were creating far fewer automated test cases during the Sprint than the number of NEW manual test cases (for UI automation) produced by developers during the same Sprint! This means that the two cumulative metrics would never converge! (even keeping in mind that we want to automate only the most crucial scenarios)

- zero learning-curve effect over 1 month proved that something was fundamentally wrong! Either a lack of motivation fogged everything, or people were still struggling with the basics (poor skill), or focus was poor (e.g. gold plating), or the cost of maintaining what was being added with every new test was insane ...

What was worse, no-one in the QA team seemed worried ;/ They were not in a mindset of considering this THEIR PROBLEM. No-one but me asked what the trend line meant ...
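The convergence point in the list above is plain arithmetic, so here is a small sketch of it. It is not taken from our tracking; the function name, the parameters and every number below are hypothetical. It assumes that only a share of the new manual test cases is worth automating (the "most crucial scenarios") and asks how many Sprints it takes to clear the automation backlog at a given pace.

```python
import math


def sprints_to_converge(backlog, automated_per_sprint, new_manual_per_sprint,
                        share_worth_automating=1.0):
    """Sprints needed to clear the automation backlog, or None if it never clears.

    All parameters are illustrative assumptions:
    - backlog: manual test cases already waiting to be automated
    - automated_per_sprint: test cases the team automates per Sprint
    - new_manual_per_sprint: new manual test cases created per Sprint
    - share_worth_automating: fraction of new cases that should be automated
    """
    inflow = new_manual_per_sprint * share_worth_automating
    net_progress = automated_per_sprint - inflow
    if net_progress <= 0:
        # The backlog grows (or stays flat) every Sprint: no convergence.
        return None
    return math.ceil(backlog / net_progress)


# Hypothetical numbers: 200 cases waiting, 10 new manual cases per Sprint,
# half of them worth automating.
print(sprints_to_converge(200, 3, 10, share_worth_automating=0.5))   # None
print(sprints_to_converge(200, 15, 10, share_worth_automating=0.5))  # 20
```

With 3 automated cases per Sprint against an inflow of 5, the gap widens forever; only when the pace beats the inflow does the question "when are we done?" even have an answer.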

Syndrome of a besieged fortress

There was not a single indication that we were one inch closer to the goal (Continuous Delivery), BUT no-one was asking questions and no-one was bringing up ideas. In fact, the QA team became extremely defensive:

- they started feeling offended that the metric was exposed to the whole organisation (transparency FTW ...) & e.g. moved all their discussions to a private Slack channel (?!)

- they didn't like the fact that developers started to ask inquisitive questions about what WE ALL were getting out of these automation efforts

- they felt very insecure about exposing their tools (the automation framework) to others - in spite of weekly nagging, UI tests were not integrated into the delivery pipeline

- they treated all the questions & ideas aimed at helping them find the root cause of the problem as some sort of attack; none of the open offers of help got any answer (!)

We are a flat organisation that encourages everyone to take ownership of some topic & expects everyone to co-define her/his role. We respect people's autonomy to pick their own tools and define "the HOW" of their problem-solving path, but ...

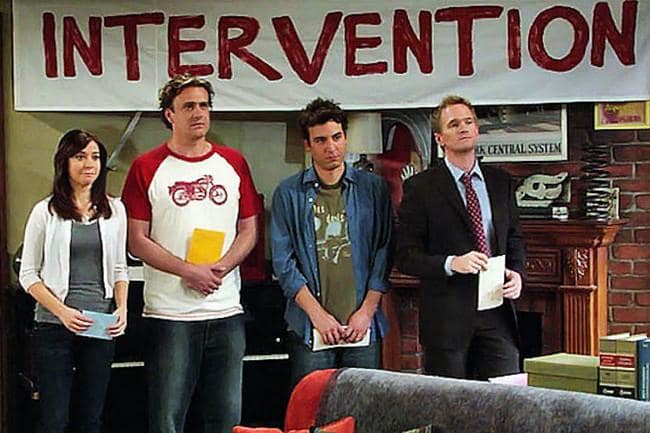

... at this point it was more than clear that the problem won't solve itself & there's a need for an ...

Next post in the series can be found here.