Part I can be found here.

TL;DR - maybe thinking in "processes" is a relic of the traditional, policy-driven, hierarchical enterprise? A decentralised model based on business events & reactions tends to have some advantages & seems to match modern architecture principles better. WHAT IF we ditch BPM completely?

Is there a better way?

There are at least a few scenarios that seem to fit modern principles of software architecture significantly better than classic BPM:

-

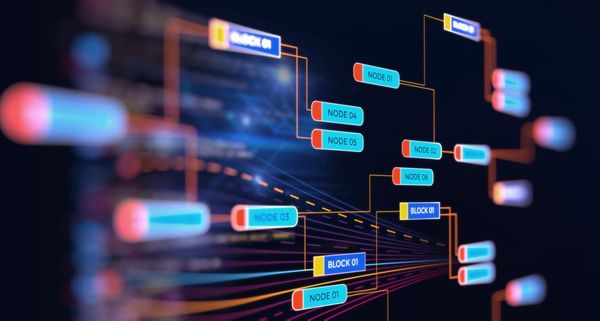

API gateway with domain-driven API, orchestrating particular microservices - in such a scenario all the interactions are dispatched & controlled via orchestrator(s), that effectively span as an additional layer of processing (services). Logic is expressed in raw code, so here's the drawback: parallel gateway "services" can be very heterogeneous

- API gateway with a generic workflow API, where the logic of particular activities is encapsulated in workflow engine-specific building blocks (DSL, framework constructs, etc.) - in this scenario clients pretty much interact with the engine only & what activities can do is strictly limited to the framework's capabilities (which makes it FAR easier to govern, FAR harder to extend) - this is pretty much like writing your own, simple BPM (well, more like a state machine, configured with an API instead of a GUI)

- Distributed orchestration with domain events' pub-sub - if you know A. Brandolini's Event Storming, that's pretty much a derivative of this approach: each business operation within a microservice should be triggered by a meaningful business event ("new employee registered", "limit amount approved", "incident escalated to manager") & as a result it can modify its own, internal state & raise more events (w/o explicit targets, it's pub-sub after all).
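To make the third scenario a bit more tangible, here's a minimal sketch in Python. It's a toy, in-process pub-sub, not a recommendation of any particular broker or framework: `EventBus`, `Event` & `LimitsService` are names made up for illustration, and the "limit of 1000" rule is an assumption, not something taken from a real system. The event names are the ones used as examples above.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, DefaultDict, List


@dataclass(frozen=True)
class Event:
    name: str                                   # e.g. "new employee registered"
    payload: dict = field(default_factory=dict)


class EventBus:
    """Toy in-process pub-sub (hypothetical); a real setup would use a broker."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self.published: List[Event] = []        # kept only so the example can be inspected

    def subscribe(self, name: str, handler: Callable[[Event], None]) -> None:
        self._handlers[name].append(handler)

    def publish(self, event: Event) -> None:
        self.published.append(event)
        # The publisher does not know (or care) who is listening.
        for handler in list(self._handlers[event.name]):
            handler(event)


class LimitsService:
    """Hypothetical microservice: reacts to one event, raises another."""

    def __init__(self, bus: EventBus) -> None:
        self._bus = bus
        self.limits: dict = {}                  # internal state, owned by this service only
        bus.subscribe("new employee registered", self.on_employee_registered)

    def on_employee_registered(self, event: Event) -> None:
        employee_id = event.payload["employee_id"]
        self.limits[employee_id] = 1000         # assumed business rule, for illustration
        # No explicit target: whoever is interested subscribes to this event.
        self._bus.publish(Event("limit amount approved",
                                {"employee_id": employee_id, "amount": 1000}))


bus = EventBus()
limits = LimitsService(bus)
bus.publish(Event("new employee registered", {"employee_id": "e-1"}))
print([e.name for e in bus.published])
# -> ['new employee registered', 'limit amount approved']
```

Note there's no "process" object anywhere: the flow emerges from who subscribes to what, which is exactly why it's less coupled and also why it doesn't give you a process perspective for free.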

Frankly, I've tried option #2 a few times & I was never fully satisfied - both #1 & #3 seem a much better choice. #1 is much simpler to develop (but may be tricky to govern & maintain), while #3 has the benefit of being less coupled, more flexible & testable (on the other hand, it's not a process perspective).

Process's identity

However, there's still the remaining issue of a workflow instance's unique identification (e.g. for tracking purposes) & state persistence. When we had a single BPM it was a no-brainer, but what if we don't?

Even if we immediately reject the idea of having a dedicated, mutable workflow state store (because of issues with versioning & tracking), there's no single, perfect solution:

- One idea is to remain ardently domain-driven: if a real-life workflow is traceable, there is a real-life way to identify it & keep (associate) its specific data - in such a case it should be an element of one of the sub-domains. And of course it may mean that various workflow sub-types will have different approaches. In my past experience it was usually not the workflow that had an identity, but the work item that the workflow was all about.

- Alternatively (and in some cases - additionally), one can identify entry points, introduce technical IDs, pass them through all the processing (all the layers) & persist (append-only) all the events (+their data) flowing through. That will help to track the history of each workflow instance, but won't help with anything related to future steps.
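The second idea can be sketched roughly like this. It's only an illustration under assumptions: `EventLog`, `RecordedEvent` & `new_correlation_id` are hypothetical names, the log lives in memory (a real one would sit on durable, append-only storage), and "incident reported" is an invented entry-point event.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class RecordedEvent:
    correlation_id: str        # technical ID created at the entry point
    name: str
    data: dict
    recorded_at: datetime


def new_correlation_id() -> str:
    """Generate a technical ID once, at the entry point of a flow."""
    return str(uuid.uuid4())


class EventLog:
    """In-memory, append-only log (hypothetical): no update, no delete."""

    def __init__(self) -> None:
        self._records: List[RecordedEvent] = []

    def append(self, correlation_id: str, name: str, **data) -> RecordedEvent:
        record = RecordedEvent(correlation_id, name, dict(data),
                               datetime.now(timezone.utc))
        self._records.append(record)
        return record

    def history(self, correlation_id: str) -> List[RecordedEvent]:
        # Everything that has happened so far for one workflow instance.
        return [r for r in self._records if r.correlation_id == correlation_id]


log = EventLog()
cid = new_correlation_id()                     # entry point: the ID is born here
log.append(cid, "incident reported", severity="high")
log.append(new_correlation_id(), "new employee registered")   # unrelated flow
log.append(cid, "incident escalated to manager")
print([r.name for r in log.history(cid)])
# -> ['incident reported', 'incident escalated to manager']
```

As said above, `history()` only answers "what has happened so far" - nothing in such a log knows what the next step is supposed to be.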

Decentralising - what do we lose?

But, but, but, don't these ideas above (except #2) lead us to losing the assumed benefits of having a centralised workflow engine?

- common task model (+authorization, +SLA control, +transition rules, etc.)?

- clear model correspondence between business process & its "digital" implementation (incl. proper visuals)?

- ability to fine-tune the process "on-the-fly" w/o involving an excessive number of (technical) people?

Yes.

Wait, no...

Some. Maybe...

It depends! :)

Common task model

Common task model is an unfulfilled designers' wet dream :) Purely theoretical, highly virtual, it quickly dissolves into a myriad of sub-variants when confronted with reality. In those very few cases when it doesn't, it's because the domain itself is specific: very standardised, repeatable, thoroughly inspected. But in such cases it should be a part of the sub-domain model.

However, there are some aspects of the common task model that can be easily shared, because they are commoditised & generic - e.g. the authorisation model, tracing the history of changes. Fortunately, you don't really need a workflow engine for that.

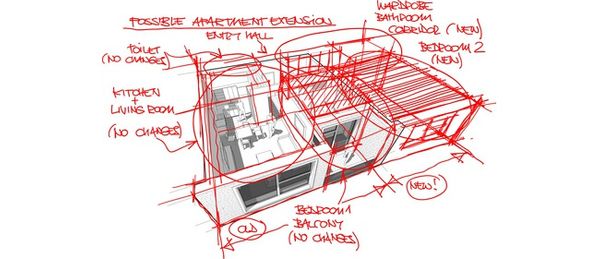

Model correspondence (& agility)

This is actually a valid point, because it's not only that the user wants to easily find out where (s)he is in the process flow, but also in many cases it's highly desirable that the application guides the user through the process (in a fool-proof way) w/o making her/him feel that (s)he has lost control.

For the first 2 scenarios the case is rather simple - workflow logic has its representation either in the custom code or in framework-specific constructs - visualisation logic & its metadata can follow the same route. It's the 3rd scenario (the most tempting one ...) that has an issue here ...

Hence my answer is as follows: scenario #3 promotes decoupling all the way & as a consequence one has to change her/his thinking from a process perspective to an event + reaction(s) perspective:

- processes are outdated abstractions: complex (with a tendency to grow even more complex), hard to optimize, even harder to version properly, & the boundaries between them are sometimes more virtual than real (e.g. same resources used)

- business events are much easier to map to real-life encounters, they are also clear & measurable & may be easily shared between "processes", BUT optimisations (e.g. queue depth) on a single-step level may be worthless

- reality in an average enterprise is more complex -> work happens within a complex mesh of daily interactions & so-called "processes" are key, chosen sub-paths (sequences of events & reactions) within the mesh. These are not always the same, they change over time & have many variants, but what really matters is:

  - a clear beginning & end of the flow we're interested in (e.g. because it's where the value stream is)

  - being able to trace all the activities that have happened on the way (so we can look for bottlenecks, examine inventory, etc.)
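A rough sketch of what "choosing a sub-path" could look like once events are traceable: pick a start event & an end event, then measure what happened in between per work item. Everything here is assumed for illustration - the `(work item id, event name, timestamp)` tuple shape, the `lead_times` helper, the "incident reported" / "incident closed" event names & the sample data.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple

# Assumed shape of a traced event: (work item id, event name, timestamp)
TraceEvent = Tuple[str, str, datetime]


def lead_times(events: Iterable[TraceEvent], start: str, end: str) -> Dict[str, timedelta]:
    """Time between the first `start` event and the first following `end` event, per work item."""
    started: Dict[str, datetime] = {}
    finished: Dict[str, timedelta] = {}
    for item_id, name, at in sorted(events, key=lambda e: e[2]):
        if name == start:
            started.setdefault(item_id, at)          # first occurrence opens the flow
        elif name == end and item_id in started and item_id not in finished:
            finished[item_id] = at - started[item_id]
    return finished


t0 = datetime(2024, 1, 1, 9, 0)                      # made-up sample data
events = [
    ("inc-1", "incident reported", t0),
    ("inc-2", "incident reported", t0 + timedelta(minutes=10)),
    ("inc-1", "incident escalated to manager", t0 + timedelta(minutes=30)),
    ("inc-1", "incident closed", t0 + timedelta(hours=2)),
]

# The "process" is just the sub-path we chose to look at.
result = lead_times(events, start="incident reported", end="incident closed")
print(result)
```

Here only `inc-1` shows up in the result (with a lead time of 2 hours), because `inc-2` hasn't reached the chosen end event yet. Swapping `start` / `end` for a different pair of events gives a different "process" to measure, w/o changing any of the services involved.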

I strongly believe that switching one's perception to such a model:

- makes flow evolution easier (as changes are smaller, decoupled & can be incremental)

- provides higher flexibility in terms of what we measure & compare (we're free to choose sub-paths w/o much limitation)

- scales much better - there are no "processes" to aggregate excessive complexity over time

Coming soon (in the next posts): what if we already have a BPM?

Pic: © ag visuell - Fotolia.com