This blog post covers: why boundaries constitute the structure, why E2E/Integration tests are not enough, what "Boundary/Contract tests" actually means, who should create such tests (& why), and how to match the expectations of more than one consumer.

P.S. All the contracts covered here are supposed to be internal. No multi-tenancy, no general-purpose APIs. Nada.

Having a modular split within an application/system is absolutely crucial (beyond some scale), but ... like everything in this world, it comes with a certain price to pay & challenges to tackle:

- boundary correctness - are the boundaries set reasonably (to maximize internal cohesion & minimize external coupling)?

- detecting breaking changes - when working within a local module we rely on boundary contracts, but how can we minimize the risk that a contract stops meeting our expectations?

Both topics are very interesting, but for the sake of this blog post, let's just focus on the 2nd one.

Theoretically the answer is simple and based on a combination of 2 techniques:

- an agreed versioning scheme (e.g. semantic versioning)

- Integration/E2E/System tests that span the boundaries of single components/modules

Sounds legit & is clearly enough in many scenarios, but ...

- a versioning scheme warns us only about potentially breaking changes in the format itself (e.g. the field structure of a RESTful resource); it usually doesn't cover domain invariants that can't be expressed in the format, a required order of operations (temporal coupling), or stateful logic between operations that's not expressed directly in the data (behavioral coupling)

- Integration/E2E/System tests are awesome, but as they are more expensive to write/maintain & slower to run, their coverage is usually narrower & the feedback (from running them) is far from instant (e.g. up to 1 full workday)
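To make the first point concrete, here is a minimal sketch of a change that keeps the response format intact yet breaks a rule the consumer depends on. The `OrdersV1`/`OrdersV2` classes, their methods and the "pay before ship" rule are all hypothetical, invented purely for illustration.

```python
# Hypothetical provider module in two releases with an identical response format.
class OrdersV1:
    def __init__(self) -> None:
        self._paid: set[str] = set()

    def pay(self, order_id: str) -> dict:
        self._paid.add(order_id)
        return {"order_id": order_id, "status": "paid"}

    def ship(self, order_id: str) -> dict:
        # Rule the consumer relies on: only paid orders can be shipped.
        if order_id not in self._paid:
            return {"order_id": order_id, "status": "rejected"}
        return {"order_id": order_id, "status": "shipped"}


class OrdersV2(OrdersV1):
    def ship(self, order_id: str) -> dict:
        # Same fields, same types, but the payment precondition was dropped.
        return {"order_id": order_id, "status": "shipped"}


def format_is_compatible(response: dict) -> bool:
    """Format-level check: the kind of change a version bump signals."""
    return set(response) == {"order_id", "status"} and all(
        isinstance(value, str) for value in response.values()
    )


def unpaid_order_is_not_shipped(orders: OrdersV1) -> bool:
    """Behavioral check: the ordering rule that the format cannot express."""
    return orders.ship("o-1")["status"] != "shipped"


if __name__ == "__main__":
    for provider in (OrdersV1(), OrdersV2()):
        print(
            type(provider).__name__,
            "format ok:", format_is_compatible(provider.ship("o-1")),
            "ordering rule ok:", unpaid_order_is_not_shipped(provider),
        )
```

Both releases pass the format check; only the behavioral check tells them apart, and that is the gap a version number alone tends to leave open.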

Fortunately, there's a solution - quite a natural one.

TDD on the level of area/component - Boundary/Contract Testing.

What's Boundary/Contract Testing?

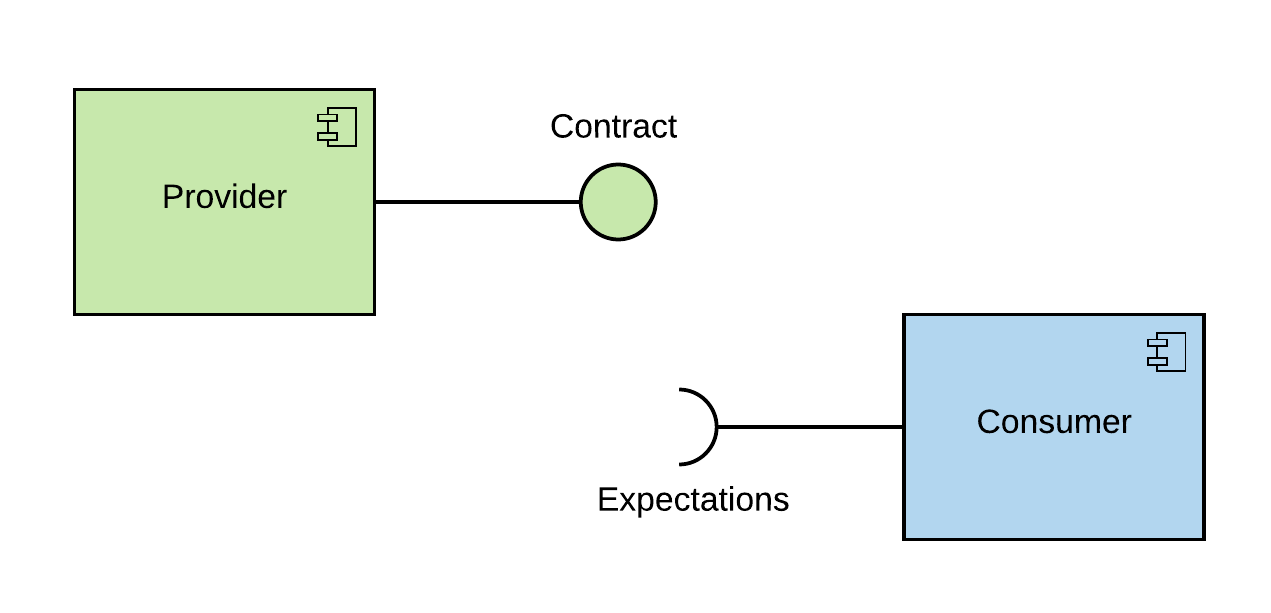

It's all about expressing our expectations (as a consumer) regarding the exposed contract of what we're going to consume

AND

stating our obligations (as a provider) regarding what we are exposing.

Wait, wait. The devil is in the details.

So ... who should write such tests? The consumer or the provider?

Are they supposed to run on a working component? Or rely on mocks?

Who should be running them? And what should happen once they break? (e.g. who should fix them)

All vital questions, let's try to tackle them.

Responsibility/duty split

There's a clear asymmetry around contracts. In fact BOTH parties (provider & consumers) need contract tests, but for different reasons:

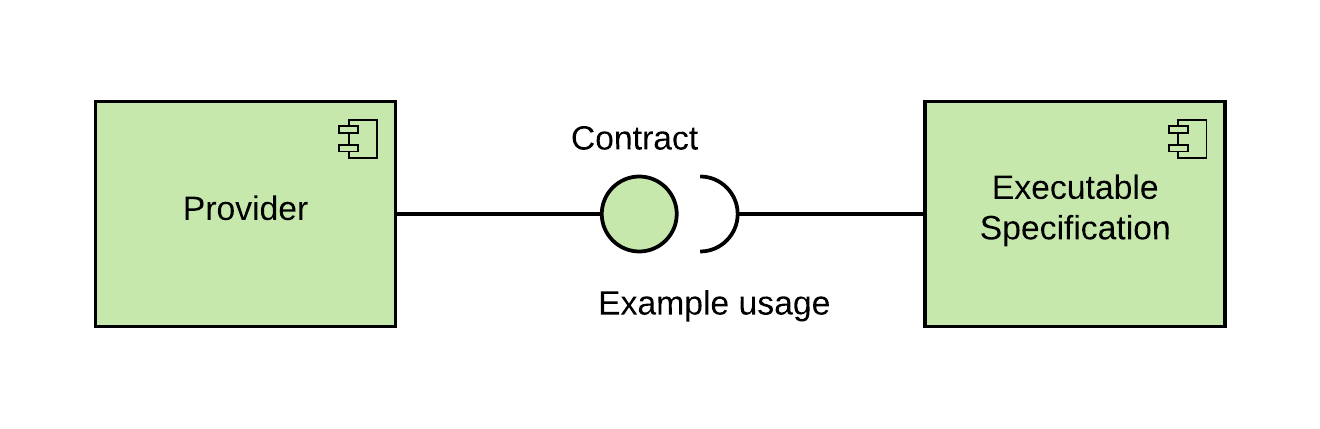

- providers need tests to validate & document the usage of the contract-specified API (aka executable specification); internal module contracts do not have to be consumer-driven, frequently they are determined by the specifics of the capability encapsulated within the module (like it or not)

- consumers need tests to secure uninterrupted & correct usage of the provided functionality - to prevent the situation where the provider makes a change they are not prepared for

That's why I consider the 1st group an internal kind of tests (anyone other than the provider uses them only as a reference/documentation) ...

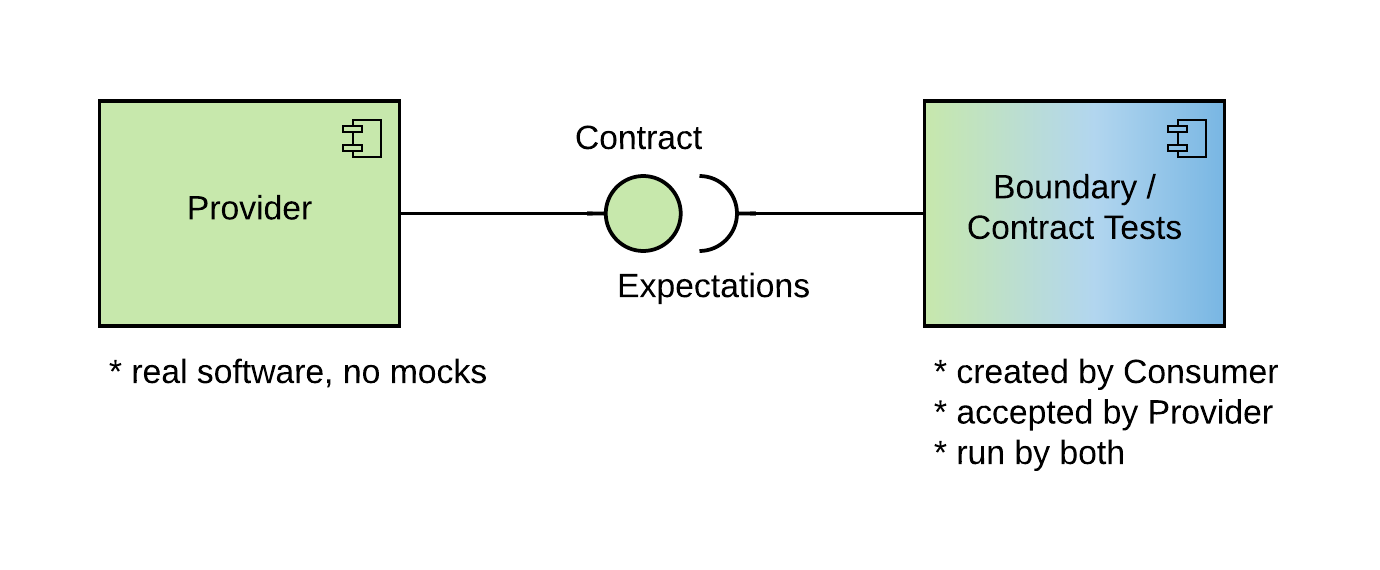

... & the 2nd group as actual contract tests (with both parties playing a vital day-to-day role within the workflow) which:

- should be created by consumers - to express their expectations regarding the contract

- should be kept with the provider (submitted as a PR by the consumer) - to make sure that changes in consumption patterns are acknowledged & approved (by the provider)

- should be run against a real, running instance of the (provider's) component

- should be run by both consumer & provider, to verify that integration conditions didn't change
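A rough sketch of what such a consumer-written suite could look like. Everything named here is an assumption made for the example: an `InventoryProvider` module, a consuming "checkout" module, and a `connect_to_provider()` helper. To keep the snippet self-contained the helper returns an in-process object; in a real pipeline it would return a client pointed at a deployed, running provider instance.

```python
import unittest


class InventoryProvider:
    """Hypothetical provider; stands in here for the real running component."""

    def __init__(self) -> None:
        self._stock = {"sku-1": 5}

    def reserve(self, sku: str, qty: int) -> dict:
        available = self._stock.get(sku, 0)
        if qty > available:
            return {"reserved": False, "available": available}
        self._stock[sku] = available - qty
        return {"reserved": True, "available": available - qty}


def connect_to_provider() -> InventoryProvider:
    # Assumption: replaced by a client for a live provider instance in CI.
    return InventoryProvider()


class CheckoutExpectsOfInventory(unittest.TestCase):
    """Written by the consumer (checkout), kept in the provider's repository."""

    def setUp(self) -> None:
        self.inventory = connect_to_provider()

    def test_reservation_reduces_available_stock(self) -> None:
        result = self.inventory.reserve("sku-1", 2)
        self.assertTrue(result["reserved"])
        self.assertEqual(result["available"], 3)

    def test_rejected_reservation_leaves_stock_untouched(self) -> None:
        rejected = self.inventory.reserve("sku-1", 99)
        self.assertFalse(rejected["reserved"])
        # Checkout retries with a smaller quantity and expects full stock.
        self.assertTrue(self.inventory.reserve("sku-1", 5)["reserved"])


def run_contract_suite() -> bool:
    """Entry point both the consumer's and the provider's pipeline can call."""
    loader = unittest.defaultTestLoader
    suite = loader.loadTestsFromTestCase(CheckoutExpectsOfInventory)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()


if __name__ == "__main__":
    raise SystemExit(0 if run_contract_suite() else 1)
```

The suite is named after the consumer and states only what checkout actually depends on, so a red run on the provider side points directly at whose expectations were broken.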

Btw. the terminology is taken from the classic, synchronous scenario (provider vs consumer), but it applies to asynchronous, pub-sub scenarios as well (the provider becomes a producer, consumers become subscribers).

Seed of uncertainty

Hmm, but doesn't it sound a bit ... impractical?

There may be more than 1 consumer (e.g. more than 1 consuming component using provided functionality) - does it mean that all of them should have a separate suite of boundary tests?

In general - that's a reasonable default - they all declare/specify THEIR expectations which may:

- be different initially (no-one says each component has to use the WHOLE contract, right?)

- evolve in different directions (or at least - at a different pace) over time
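One possible way to organize this, sketched under assumptions: a hypothetical `PricingProvider` with two consumers ("checkout" and "invoicing"), each registering only the expectations it cares about. The registry layout and all names are illustrative and not a reference to any particular tool.

```python
from typing import Callable, Dict, List


class PricingProvider:
    """Hypothetical provider module exposing a single operation."""

    def quote(self, sku: str, qty: int) -> dict:
        unit_price = 10
        return {
            "sku": sku,
            "unit_price": unit_price,
            "total": unit_price * qty,
            "currency": "EUR",
        }


Expectation = Callable[[PricingProvider], None]


def checkout_expects_total_to_scale_with_quantity(p: PricingProvider) -> None:
    quote = p.quote("sku-1", 3)
    assert quote["total"] == quote["unit_price"] * 3


def invoicing_expects_three_letter_currency(p: PricingProvider) -> None:
    assert len(p.quote("sku-1", 1)["currency"]) == 3


# Each consumer owns its own entry and covers only the part it uses.
SUITES: Dict[str, List[Expectation]] = {
    "checkout": [checkout_expects_total_to_scale_with_quantity],
    "invoicing": [invoicing_expects_three_letter_currency],
}


def verify_all(provider: PricingProvider) -> Dict[str, List[str]]:
    """Run every consumer's suite; return failed expectations per consumer."""
    failures: Dict[str, List[str]] = {}
    for consumer, expectations in SUITES.items():
        for expectation in expectations:
            try:
                expectation(provider)
            except AssertionError:
                failures.setdefault(consumer, []).append(expectation.__name__)
    return failures


if __name__ == "__main__":
    print(verify_all(PricingProvider()))
```

Because failures are grouped per consumer, the provider can see which consuming component a planned change would affect, and each suite can evolve at its own pace.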

Wouldn't it also be more practical (in fact - some do it already) to provide a stub: a provider-implemented "simulator" of the provider's contract implementation that everyone could use to validate their assumptions?

It sounds like a good plan, but it: (a) introduces additional inertia (because of stub distribution); (b) makes you test an "artificial" stub instead of the real component - which may be a problem, because the purpose of contract tests is not really to validate the basics (these are carved into the format), but the more contextual details.

An overkill?

Yeah, I know - many teams speak about contract tests, but fewer actually implement them. So maybe it isn't really that important?

It becomes important over time - once a system/application reaches a certain size, knowledge management becomes a huge issue: it's practically impossible for a single person to cover all the processing end-to-end throughout the whole platform (re-trace all the dependencies across all the components).

Efficient troubleshooting, or even system development at a reasonable speed, requires jumping across levels of abstraction. To rise beyond some level you need to treat your components/modules as black boxes with very solid foundations: strong & maintainable boundaries. Such boundaries make a component's behavior predictable & guarantee that an issue is "encapsulated" (limited to a particular component or a particular interaction) - so it can be bulkheaded within a single component while having a minimal impact on the rest of the system.