In this article you'll find: what a "capability" is, why UI-driven application design is sh*t, why conceptual layering is important, which parts of software are less change-friendly & whether that's a problem, and why "fibers" are the single worst anti-pattern (even if you haven't heard of them until now ...).

I don't even remember where & when I wrote those words down. It was at one of the conferences or meet-ups I attend regularly, but I can't recall which one. It doesn't matter though; the point is that I noted down a one-liner striking enough to phrase, near-perfectly, what I had been struggling to express in a much longer form:

"Good design is adaptive, not predictive."

You've probably grasped the meaning intuitively, so there's no need to clarify it word by word, but let me rephrase it my way, from a different perspective I personally find more suitable here:

- good design IS NOT about specific use cases (end-user interactions) FIRST - these are always just hypotheses that may turn out to be sub-optimal, inconvenient, ambiguous or simply not intuitive enough

- good design IS about (core) capabilities FIRST - atomic abilities of the "platform" (or whatever powers your applications) that determine the palette of possibilities for use cases you can build upon them

Tinker with & create capabilities (features of your domain model), make them as independent as possible, distinguish each capability's core functionality from its side effects, manage them well (document them, apply versioning if needed), then build an application layer over them and keep fine-tuning it - APIs, GUIs, TUIs or whatever is preferred in your case - polishing it to keep your users happy.
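A minimal Python sketch of what "distinguish a capability's core functionality from its side effects" can look like in code. All names here (`Account`, `Withdrawn`, `withdraw`, `run_withdraw`) are hypothetical and chosen only for illustration: the core is a pure function that validates, computes the new state and reports what happened, while side effects (notifications, audit logs, etc.) are passed in from the outside, so the application layer can swap them without touching the capability itself.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Account:
    """Hypothetical domain entity (balance kept in cents)."""
    account_id: str
    balance: int


@dataclass(frozen=True)
class Withdrawn:
    """Hypothetical fact reported by the capability's core."""
    account_id: str
    amount: int


def withdraw(account: Account, amount: int) -> Tuple[Account, Withdrawn]:
    """Core capability: pure domain logic, no I/O, no notifications."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount > account.balance:
        raise ValueError("insufficient funds")
    updated = replace(account, balance=account.balance - amount)
    return updated, Withdrawn(account.account_id, amount)


# Side effects are plain callables registered by the application layer.
SideEffect = Callable[[Withdrawn], None]


def run_withdraw(account: Account, amount: int, side_effects: List[SideEffect]) -> Account:
    """Runs the core first; side effects only fire after it has succeeded."""
    updated, event = withdraw(account, amount)
    for effect in side_effects:
        effect(event)
    return updated


if __name__ == "__main__":
    outbox: List[str] = []
    account = run_withdraw(
        Account("acc-1", 1000),
        250,
        [lambda e: outbox.append(f"notify {e.account_id}: -{e.amount}")],
    )
    print(account.balance, outbox)
```

Keeping `withdraw` free of I/O is a design choice, not a requirement: it keeps the capability easy to test and lets every API, GUI or TUI reuse it with its own set of side effects.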

Or, if you're using Event Storming for example: discover the domain events, match them with their corresponding commands and locate the aggregates they relate to - these aggregates & commands define your key capabilities.
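One possible way to carry an Event Storming board into code, sketched in Python under the assumption of a hypothetical ordering domain: commands (`PlaceOrder`, `CancelOrder`) express intent, the aggregate (`Order`) guards its invariants, and domain events (`OrderPlaced`, `OrderCancelled`) record facts. None of these names come from a real library; they stand in for whatever your own sticky notes say.

```python
from dataclasses import dataclass, field
from typing import List, Tuple, Union


# Commands (intent); hypothetical names, as if copied from the board
@dataclass(frozen=True)
class PlaceOrder:
    order_id: str
    items: Tuple[str, ...]


@dataclass(frozen=True)
class CancelOrder:
    order_id: str


# Domain events (facts that already happened)
@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    items: Tuple[str, ...]


@dataclass(frozen=True)
class OrderCancelled:
    order_id: str


Event = Union[OrderPlaced, OrderCancelled]


@dataclass
class Order:
    """Aggregate: guards its own invariants and records the events it emits."""
    order_id: str
    status: str = "new"
    pending_events: List[Event] = field(default_factory=list)

    def place(self, cmd: PlaceOrder) -> None:
        if self.status != "new":
            raise ValueError(f"cannot place an order in status {self.status!r}")
        if not cmd.items:
            raise ValueError("an order needs at least one item")
        self.status = "placed"
        self.pending_events.append(OrderPlaced(self.order_id, cmd.items))

    def cancel(self, cmd: CancelOrder) -> None:
        if self.status != "placed":
            raise ValueError(f"cannot cancel an order in status {self.status!r}")
        self.status = "cancelled"
        self.pending_events.append(OrderCancelled(self.order_id))
```

Each public method of the aggregate handles exactly one command, which is roughly what a "capability" boils down to in this sketch.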

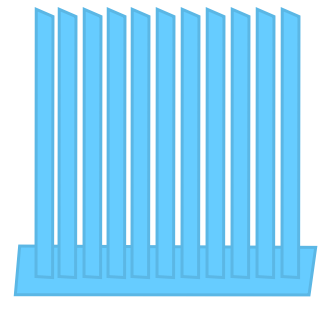

If you do that correctly, the "engine" (capabilities) will remain stable - changes that do not affect your domain will either not touch it at all, or their range will be well isolated. The frequently moving parts will be the visible (user-facing) ones, which are much easier to adjust and, if well decoupled, won't break the business logic.
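To illustrate the split between a stable "engine" and frequently moving user-facing parts, here's a small Python sketch with a hypothetical product-search capability and two interchangeable presentation adapters. The names (`Product`, `find_products`, `render_json`, `render_text`) are assumptions made for this example only.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Product:
    """Hypothetical entity; price kept in cents."""
    sku: str
    name: str
    price_cents: int


# "Engine": a hypothetical search capability that knows nothing about any UI.
def find_products(catalog: List[Product], query: str) -> List[Product]:
    q = query.lower()
    return [p for p in catalog if q in p.name.lower()]


# User-facing adapters: these may change often without touching find_products.
def render_json(products: List[Product]) -> str:
    return json.dumps(
        [{"sku": p.sku, "name": p.name, "price": p.price_cents / 100} for p in products]
    )


def render_text(products: List[Product]) -> str:
    return "\n".join(
        f"{p.sku}  {p.name:<20} {p.price_cents / 100:>8.2f}" for p in products
    )


if __name__ == "__main__":
    catalog = [Product("A1", "Red Mug", 1250), Product("B2", "Blue Plate", 900)]
    hits = find_products(catalog, "red")
    print(render_json(hits))
    print(render_text(hits))
```

Redesigning the output (new JSON fields, a different text layout) only touches the `render_*` functions; `find_products` stays as it is.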

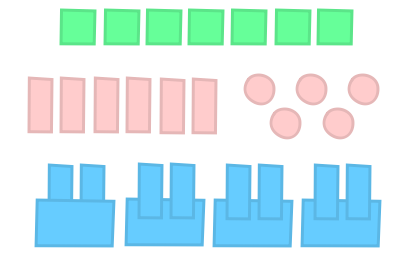

Unfortunately, there are so many companies too focused on (what they think is ...) speed of delivery: their development is strictly UI-driven &, under the "agile cloak", they follow a waterfall-ish routine of creating single-use-case "fibers" through all the architecture layers, e.g.:

-> Visual + Interaction Design

-> Static HTML

-> Front-End Components

-> API to serve the data

-> Adjustments of the ORM & Data Model (as needed)

This works very well in the first few months (a greenfield project has yet to accumulate technical debt), but it generates insane inertia - I call it "fiber-based development" (that's a topic for a separate blog post ...). Code built this way lacks ANY REAL design (except UI/UX design, which is just a fraction of what should truly happen) & is far too rigid to allow fast adoption of new ideas in the future.

As a result, existing code doesn't get properly adjusted to reflect domain-related changes; instead, engineers keep adding new "fibers" in parallel to existing functionality (dead or still alive), which makes any meaningful refactoring even more expensive ... And so it goes: rinse & repeat.

Learn these lessons:

- don't skip/overlook the DESIGN

- the oldest law of Computer Science - DIVIDE & CONQUER - applies as well as it always has: not only to splitting code into smaller chunks but also (most of all!) to systems design

So build truly adaptive software by modeling your solutions as layers of abstraction that represent separate conceptual "layers" of your domain model: contexts, key domain terms (/entities), core capabilities (around these terms), distinguished stateful processes, etc.
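As an example of the "distinguished stateful processes" layer, here's a minimal Python sketch of a process manager that sits above independent capabilities: it reacts to their events and decides which command to issue next. The fulfilment scenario and every name in it (`PaymentReceived`, `StockReserved`, `ShipOrder`, `FulfilmentProcess`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Union


# Events published by two independent (hypothetical) capabilities
@dataclass(frozen=True)
class PaymentReceived:
    order_id: str


@dataclass(frozen=True)
class StockReserved:
    order_id: str


# Command the process issues to a third (hypothetical) capability
@dataclass(frozen=True)
class ShipOrder:
    order_id: str


@dataclass
class FulfilmentProcess:
    """Stateful process layered on top of capabilities: it tracks progress and
    decides what comes next, while the business rules stay in the capabilities."""
    order_id: str
    paid: bool = False
    reserved: bool = False
    shipped: bool = False

    def handle(self, event: Union[PaymentReceived, StockReserved]) -> Optional[ShipOrder]:
        if event.order_id != self.order_id:
            return None  # an event for some other order; ignore it
        if isinstance(event, PaymentReceived):
            self.paid = True
        elif isinstance(event, StockReserved):
            self.reserved = True
        # Ship exactly once, and only after both preconditions are met
        if self.paid and self.reserved and not self.shipped:
            self.shipped = True
            return ShipOrder(self.order_id)
        return None
```

The process only remembers where it is; charging, reserving and shipping stay inside their respective capabilities, so each layer can evolve on its own.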