The struggle against coupling doesn't just seem eternal. It IS eternal. The multi-dimensionality of the problem (compile-time, deploy-time, run-time, ...), the ease of breaking a state that was achieved with great effort, the complexity of validation / monitoring (try to quantify coupling in a way that makes sense ;>) - none of these make things easier. Especially when you realize that sometimes you've got snakes in places you've considered relatively safe & harmless - in my case: contracts.

By contract I mean shared metadata used to describe the format of data exchanged between endpoints.
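To make this definition a bit more concrete, here's a minimal sketch of a contract as plain shared metadata: a mapping of field names to types that both endpoints can consult, plus a check whether a message conforms to it. `ORDER_PLACED_V1`, its fields and `conforms` are hypothetical, made up for illustration; in practice such metadata would usually live in something like a JSON Schema or Swagger document rather than in code.

```python
from typing import Any

# Hypothetical contract: shared metadata describing the format of one message type.
# It carries no behavior, only a name, a version and the expected field types.
ORDER_PLACED_V1 = {
    "name": "OrderPlaced",
    "version": "1.0.0",
    "fields": {"order_id": str, "customer_id": str, "total_cents": int},
}


def conforms(message: dict[str, Any], contract: dict[str, Any]) -> bool:
    """True if every field declared in the contract is present with the declared type.

    Extra fields in the message are tolerated on purpose.
    """
    return all(
        name in message and isinstance(message[name], expected_type)
        for name, expected_type in contract["fields"].items()
    )


print(conforms({"order_id": "o-1", "customer_id": "c-7", "total_cents": 1999}, ORDER_PLACED_V1))  # True
print(conforms({"order_id": "o-1", "total_cents": "19.99"}, ORDER_PLACED_V1))  # False
```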

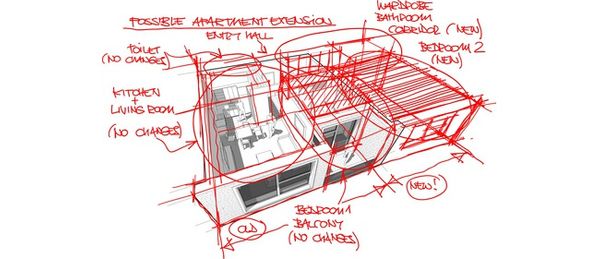

There are several options for maintaining contracts (platform- / language- / tech-agnostic) during their lifecycle:

- well, you can keep the contract on the owner (supplier) side & re-generate it on demand on the other (consumer) side (based on what the supplier actually publishes)

- you can share the actual source / binary of the contract specification as an artifact

- you can maintain the contract on both sides (manually), based on mutual agreement, open standards & helper tools like Swagger

I was always all for contract as an artifact, but this approach, when not done 100% properly, has serious flaws (forced re-deployments) - so right now I'm leaning more towards the last option. Contract as an artifact is absolutely terrific if your priority is to automate dealing with the dependency, but a huge behemoth with highly automated deployment is still a huge behemoth after all.
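With the last option nothing shared gets compiled in, so the mutual agreement has to be verified some other way. Here's a minimal sketch, assuming both sides can export their own view of a message as a field-to-type-name mapping (e.g. extracted from a Swagger document; that extraction isn't shown). The rule checked is that everything the consumer relies on is provided by the supplier with the agreed type. `SUPPLIER_VIEW`, `CONSUMER_VIEW` and `mismatches` are hypothetical names.

```python
# Hypothetical views of the same message, maintained independently on each side.
SUPPLIER_VIEW = {"order_id": "string", "customer_id": "string", "total_cents": "integer", "currency": "string"}
CONSUMER_VIEW = {"order_id": "string", "total_cents": "integer"}


def mismatches(supplier: dict[str, str], consumer: dict[str, str]) -> list[str]:
    """List every field the consumer relies on that the supplier doesn't provide as agreed."""
    problems = []
    for field, expected in consumer.items():
        actual = supplier.get(field)
        if actual is None:
            problems.append(f"missing field: {field}")
        elif actual != expected:
            problems.append(f"type mismatch on {field}: expected {expected}, got {actual}")
    return problems


# Fields the consumer doesn't use (customer_id, currency) are irrelevant,
# so the supplier can add fields without forcing the consumer to change.
print(mismatches(SUPPLIER_VIEW, CONSUMER_VIEW))  # []
```

Run as part of both sides' test suites, a check like this turns "mutual agreement" into something that fails loudly instead of in production.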

Unsurprisingly, the simpler the contract, the easier maintenance gets:

- Message-driven > RPC-alike

- One-way > Request-Response

- Human readable, atomic type-based > Vendor-locked proprietary formats

There's not much to add here, I believe. Message-driven APIs are also the bread'n'butter of asynchronous communication, one of the foundations of the Reactive Applications paradigm. Traditional RPC-style (synchronous, request-response) communication may theoretically be very convenient, as it looks much like extending a traditional method call across machine boundaries, but naively assuming that it just works that way means completely ignoring the fallacies of distributed computing - and the effects will be very painful (in terms of system reliability, responsiveness & scalability, of course).
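To illustrate the preferred side of each comparison in the list above, here's a minimal sketch of a one-way message built only from atomic types and serialized as human-readable JSON. The envelope layout (`type`, `version`, `payload`), the `OrderPlaced` event and the in-memory `OUTBOX` (a stand-in for a real broker or queue) are all assumptions of this sketch, not any standard.

```python
import json
from dataclasses import asdict, dataclass

# Stand-in for a message broker / queue (hypothetical).
OUTBOX: list[str] = []


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical event built only from atomic types (no nested vendor-specific objects)."""
    order_id: str
    customer_id: str
    total_cents: int  # money as integer cents, sidestepping float rounding


def publish(event: OrderPlaced, version: str = "1.0.0") -> None:
    """One-way: serialize and hand the message off; nothing is returned, no reply is awaited."""
    envelope = {"type": type(event).__name__, "version": version, "payload": asdict(event)}
    OUTBOX.append(json.dumps(envelope, sort_keys=True))


publish(OrderPlaced("o-1", "c-7", 1999))
print(OUTBOX[0])
# {"payload": {"customer_id": "c-7", "order_id": "o-1", "total_cents": 1999}, "type": "OrderPlaced", "version": "1.0.0"}
```

Any consumer that can parse JSON can read this without sharing a single line of the supplier's code, which is exactly what keeps the contract cheap to maintain.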

And there's also one more aspect, one that gets forgotten pretty frequently: detecting (& announcing) the moment when consumers have to adapt to breaking changes in the contract. The easy-going way is to do that on every change, but that's most likely overkill, because in some cases adaptation may mean recompilation and redeployment (and, as a result, massive inertia & additional effort).

Fortunately, there are a few basic rules that will help in preventing that:

- use semantic versioning - yes, I can't emphasize this enough - use semantic versioning!

- when making a non-breaking change, don't require a contract update on the downstream party (consumer) ...

- ... or even avoid having the contract as an artifact at all by using REST over HTTP

- slap on run-time version sync assertions (just in case)

- automate testing of your contracts (like that)

- follow DDD principles when designing your API domain-wise (to make it less brittle)
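A few of the rules above can be sketched in code. This is a minimal illustration, assuming contract versions are plain `MAJOR.MINOR.PATCH` strings (no pre-release tags) and that, as semantic versioning prescribes, only a MAJOR bump signals a breaking change. The consumer reads messages tolerantly (ignoring fields it doesn't know, so non-breaking additions need no update on its side) and checks at run time that the incoming major version matches the one it was built against. `SUPPORTED_MAJOR`, `is_breaking`, `read_order` and the `OrderPlaced` fields are hypothetical.

```python
from dataclasses import dataclass, fields

# Hypothetical: the contract major version this consumer was built against.
SUPPORTED_MAJOR = 1


def parse_semver(version: str) -> tuple[int, int, int]:
    """Split a plain MAJOR.MINOR.PATCH string into integers (pre-release/build tags not handled)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def is_breaking(old: str, new: str) -> bool:
    """Under semantic versioning, only a MAJOR bump announces a breaking change."""
    return parse_semver(new)[0] != parse_semver(old)[0]


@dataclass(frozen=True)
class OrderPlaced:
    """The consumer's own (hypothetical) view of the message: only the fields it uses."""
    order_id: str
    total_cents: int


def read_order(envelope: dict) -> OrderPlaced:
    """Tolerant reader guarded by a run-time version sync check."""
    version = envelope["version"]
    # Fail fast on an incompatible contract instead of silently misreading the data
    # (an explicit raise rather than `assert`, which Python drops when run with -O).
    if parse_semver(version)[0] != SUPPORTED_MAJOR:
        raise ValueError(f"contract {version} unsupported, expected major {SUPPORTED_MAJOR}")
    known = {f.name for f in fields(OrderPlaced)}
    # Ignore unknown fields, so MINOR-level additions on the supplier side need no consumer update.
    return OrderPlaced(**{k: v for k, v in envelope["payload"].items() if k in known})


print(is_breaking("1.4.2", "1.5.0"))  # False
print(is_breaking("1.4.2", "2.0.0"))  # True
print(read_order({"version": "1.5.0", "payload": {"order_id": "o-1", "total_cents": 1999, "currency": "EUR"}}))
# OrderPlaced(order_id='o-1', total_cents=1999)
```

The design choice here is that the only event forcing the consumer to move is a MAJOR bump, which is exactly the moment worth announcing; everything else flows through without recompilation or redeployment.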

Flawed contract maintenance may not be a big issue if you're integrating 2, 3 or even 4 applications, but if you're seriously thinking about decomposing a clusterfuck of a monolithic BBoM (Big Ball of Mud), you can't really afford to take this part too lightly. Even if your applications are well defined & deployed in a repeatable, stable way, being "over-prone" to meaningless (technical, not actually business-oriented) API changes means that you're dealing with a Big Ball of Distributed Mud, which is even worse than a traditional one.

Pic: © mgkuijpers - Fotolia.com