I hate starting a blog post without a clear statement or a question to be answered. But sometimes it's just the best way - hopefully things will become clear as you read.

No more "free lunch"

Technology has been advancing like crazy for as long as I can remember. Until quite recently, I was buying a new computer every 2-2.5 years. CPU frequencies, RAM, disk space - all of those were increasing exponentially. The cost of new hardware may have been painful, but in exchange each new generation was clearly faster & more powerful. Sometimes the best way to solve performance issues in software was just ... to wait a few months, until the next generation of processors was released.

But ... this is not happening anymore.

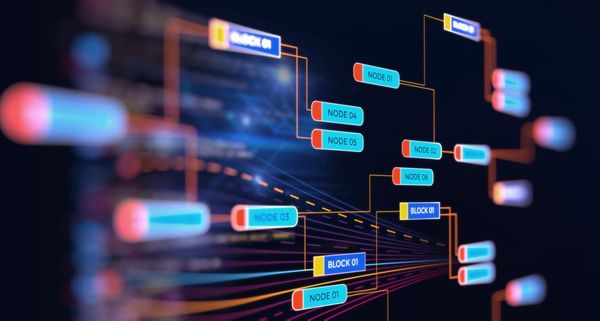

At least not in the same way it did before. We still get more powerful computers once in a while, but they are not "faster" in the direct way we were used to ... Due to physical constraints, clock frequencies are not increasing anymore; processors get more & more cores instead. The future of computing is all about manycore / multicore architecture: massively parallel, distributed (at least to some degree) & able to scale out. Look around, checking your smartphone's spec should be enough - it's not the future, it's today.

If you want to read or hear a bit more about that, professor Kevin Hammond from the University of St. Andrews gave a very interesting lecture on the current state of multi-core architectures at Lambda Days 2015 - it should be available as video here soon.

More powerful ~ more performant?

Why should we care? More cores with constant CPU frequency still means higher computing power, right?

Yes. Yes, "but".

It's higher computing power only if you can parallelize your program's execution across concurrent processing streams (threads, processes, etc.).

"Easy peasy, my .NET framework does that for me - it gives me ThreadPools, Tasks & all the other fancy async stuff."

My ass it does.

Everything works as long as:

- you're not sharing anything between the threads: resources, objects in memory, anything

- you can efficiently run the processing streams on the cores you have (more threads than cores = more switching & less efficiency)
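To put a rough number on that "yes, but": Amdahl's law (not named in this post, I'm bringing it in as an illustration) says that only the parallelizable part of a program benefits from extra cores. Below is a minimal sketch; the function name `amdahl_speedup` and the 90% figure are my own assumptions, picked just to show the shape of the curve.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical upper bound on speedup according to Amdahl's law.

    parallel_fraction: share of the run time that can run in parallel (0.0-1.0).
    cores: number of cores the parallel part is spread across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0.0 and 1.0")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part always takes the same time; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical program in which 90% of the work can be parallelized.
    for cores in (1, 2, 4, 8, 64):
        print(cores, "cores ->", round(amdahl_speedup(0.9, cores), 2), "x")
```

With 10% of the work stuck in a serial section, the speedup can never reach 10x, no matter how many cores you throw at it. This is an idealized bound - it ignores context switching and lock contention, which only make things worse.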

Basically, sharing enforces locking (let's skip the message-passing alternative for now), and locking kills performance & causes deadlocks (& as a result - timeouts - with all their consequences). It works that way within:

- a single processor (multi-core)

- multi-processor computers (welcome to NUMA world!)

- a fully distributed model, where locking morphs into wicked sick distributed n-phase commit strategies ...
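Here's a minimal sketch of the difference between sharing and not sharing, in Python rather than .NET (just to keep it short). The function names `sum_with_shared_state` and `sum_share_nothing` are hypothetical, made up for this illustration. Keep in mind that CPython's global interpreter lock prevents threads from running CPU-bound code in parallel anyway, so treat this as a picture of the structure, not as a benchmark.

```python
import threading


def sum_with_shared_state(chunks):
    """All workers update one shared total, so every update has to take a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        for value in chunk:
            with lock:  # workers queue up here, one at a time
                total += value

    threads = [threading.Thread(target=worker, args=(chunk,)) for chunk in chunks]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return total


def sum_share_nothing(chunks):
    """Each worker sums only its own chunk; results are merged once at the end."""
    partials = [0] * len(chunks)

    def worker(index, chunk):
        # Each worker writes only to its own slot, so no lock is needed.
        partials[index] = sum(chunk)

    threads = [
        threading.Thread(target=worker, args=(index, chunk))
        for index, chunk in enumerate(chunks)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sum(partials)


if __name__ == "__main__":
    data = [list(range(i * 1000, (i + 1) * 1000)) for i in range(4)]
    print(sum_with_shared_state(data), sum_share_nothing(data))
```

Both versions return the same result, but the first one takes the lock once per element, while the second one never contends at all - the only coordination is the final merge after all workers are done.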

Does it hurt software creators that much these days? Depends.

Appetite for services

Even mid-range servers are powerful enough to handle the huge traffic & massive load generated by tens of thousands of simultaneous users. That's good enough even for large sites, but not for the biggest ones (social networks, global retailers or other service providers). But this situation is not carved in stone. Two or three years ago load was generated mainly by people using desktops, but today we live in the era of mobile devices - our smartphones & tablets are pushing this load even higher (well, we use them all the time ...). Tomorrow (or even today) it will be wearables. Next week - maybe the Internet of Things - which seems to be about a completely different scale of needs: even your washing machine, fridge, dishwasher & zillions of sensors spread around - all of them will communicate, push & poll information at an unprecedented scale.

Your IT systems will be the ones to handle that.

So, even if you don't need to care about the efficiency of concurrency in your programs today, don't take it for granted when thinking about tomorrow.

Obviously, there will be shitloads of applications / services that will stay exactly as they are now, but completely new needs & expectations will arise, and they are likely to reshape software development as we know it today.

... to the rescue!

What can we do to get ourselves ready for this grim vision?

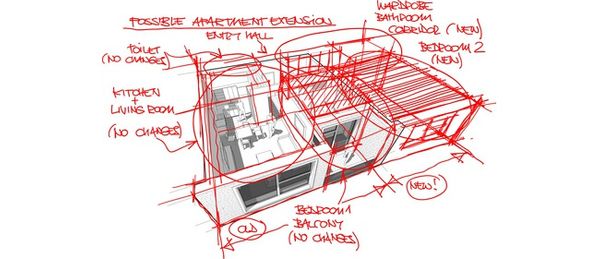

Basically (and now we're getting to my idea for this article) we have to adopt & synergize a bunch of recently hyped buzzwords :) We can joke about that, but it's the honest truth. What makes it interesting is that these ideas are usually considered individually, while the way they work together seems very beneficial.

Namely (in random order):

- Share nothing

- Fail fast

- Immutability

- Asynchrony

- Microservices & Hexagonal Architecture

- Actor model

- Continuous Delivery

- Reactive Applications

- Message-driven integration

- Functional Programming

- CQRS & Event Sourcing

The details will follow in Part II - to be published within a few days.